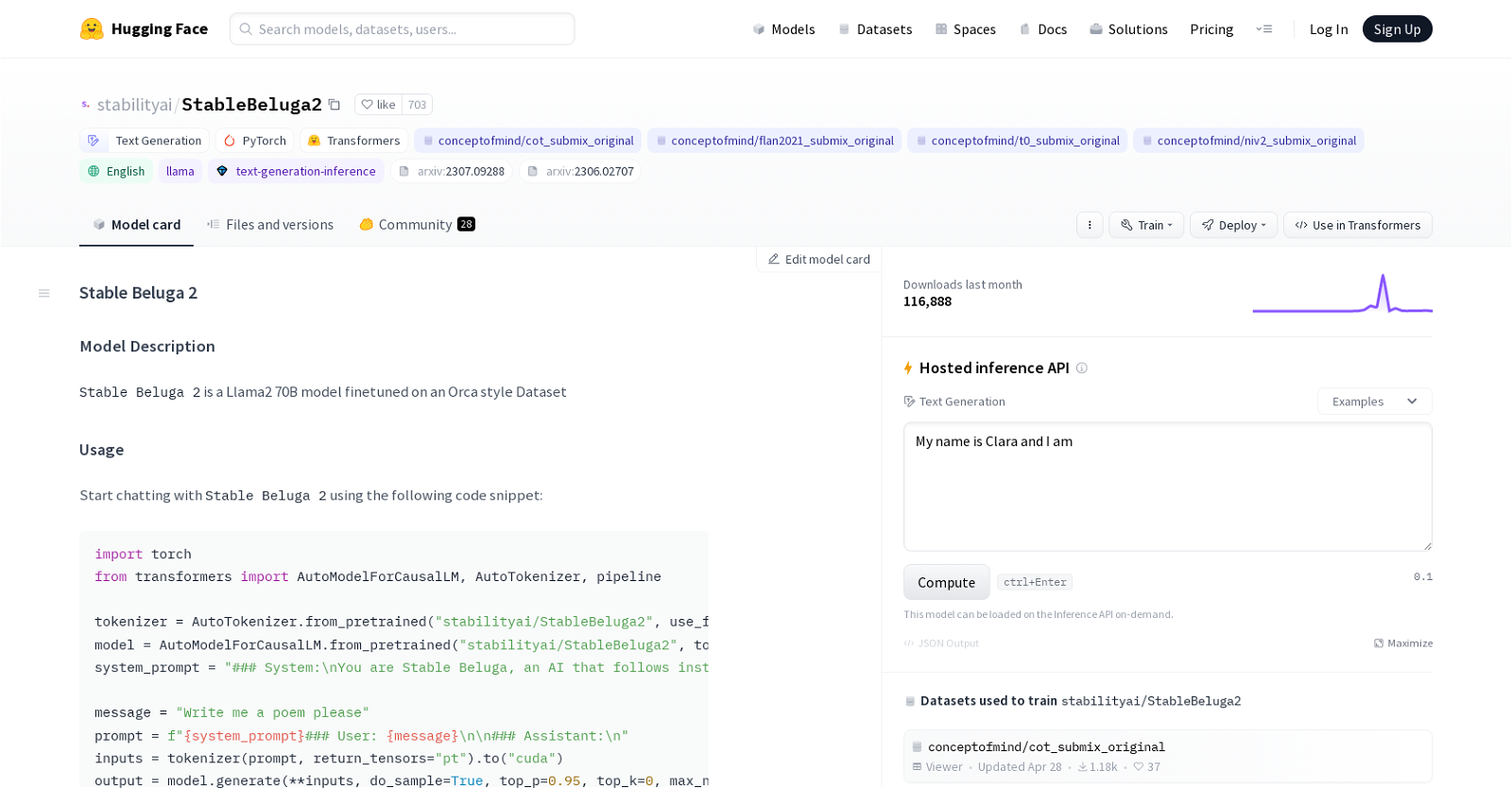

StableBeluga2 is an auto-regressive language model developed by Stability AI, built by fine-tuning the Llama2 70B model. It generates text in response to user prompts.

The model can be used for natural language processing tasks such as text generation and conversational AI. To use StableBeluga2, developers import the tokenizer and model classes from the Transformers library and follow the code snippet provided in the model card.
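As a rough illustration of that workflow, the sketch below loads the tokenizer and model through Transformers and wraps a single generation call. It makes several assumptions that are not stated in this summary: the Hugging Face model ID `stabilityai/StableBeluga2`, half-precision weights, and `device_map="auto"` (which needs the `accelerate` package). The helper names `load_stable_beluga` and `generate_reply` are hypothetical. Imports are deferred into the loader so that defining the helpers does not trigger a download of the 70B checkpoint.

```python
def load_stable_beluga(model_id: str = "stabilityai/StableBeluga2"):
    """Load tokenizer and model. The model_id default is an assumption."""
    # Deferred imports: nothing heavy happens until this function is called.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=False)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.float16,  # assumed: half precision to reduce memory
        low_cpu_mem_usage=True,
        device_map="auto",          # assumed: requires the accelerate package
    )
    return tokenizer, model


def generate_reply(tokenizer, model, prompt: str, **generate_kwargs) -> str:
    """Tokenize the prompt, generate, and decode the full output sequence."""
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, **generate_kwargs)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

A call would look like `tokenizer, model = load_stable_beluga()` followed by `generate_reply(tokenizer, model, prompt, max_new_tokens=256)`. Note that a 70B-parameter model needs substantial GPU memory, so this is not something to run on a typical workstation.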

The model takes a prompt as input and generates a response based on the prompt. The prompt format includes a system prompt, user prompt, and assistant output.
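The three-part prompt described above can be assembled with a small helper. This is a minimal sketch that assumes the sections are introduced by `### System:`, `### User:`, and `### Assistant:` headers; those markers, the default system text, and the function name `build_prompt` are assumptions for illustration and should be checked against the model card.

```python
# Hypothetical default; replace with the system prompt your application needs.
DEFAULT_SYSTEM_PROMPT = "You are a helpful assistant."


def build_prompt(user_message: str, system_prompt: str = DEFAULT_SYSTEM_PROMPT) -> str:
    """Join system and user text and leave the assistant section open."""
    return (
        f"### System:\n{system_prompt}\n\n"
        f"### User:\n{user_message}\n\n"
        "### Assistant:\n"  # the model continues writing from here
    )


if __name__ == "__main__":
    print(build_prompt("Write me a short poem."))
```

Ending the string with an open assistant section signals to the model that the next tokens it produces are the reply.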

The model supports customization through sampling parameters such as top-p and top-k, which control the output.

StableBeluga2 is trained on an internal Orca-style dataset, fine-tuned using mixed-precision (BF16) training, and optimized with AdamW.
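To make the effect of the top-k and top-p parameters mentioned above concrete, here is a toy filter over a hand-written next-token distribution. It is a simplified illustration of the two sampling techniques, not the Transformers implementation; the function name `top_k_top_p_filter` and the example probabilities are invented for the sketch.

```python
def top_k_top_p_filter(probs: dict, top_k: int = 0, top_p: float = 1.0) -> dict:
    """Return the renormalized distribution that survives top-k, then top-p.

    top_k=0 disables the top-k step; top_p=1.0 disables the top-p step.
    """
    # Rank tokens from most to least probable.
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)

    # Top-k: keep only the k most probable tokens, then renormalize.
    if top_k > 0:
        ranked = ranked[:top_k]
    total = sum(p for _, p in ranked)
    ranked = [(tok, p / total) for tok, p in ranked]

    # Top-p (nucleus): keep the smallest prefix whose mass reaches top_p.
    kept, cumulative = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break

    # Renormalize so the surviving probabilities sum to 1.
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}


if __name__ == "__main__":
    toy = {"cat": 0.5, "dog": 0.3, "fish": 0.15, "rock": 0.05}
    print(top_k_top_p_filter(toy, top_k=2))
    print(top_k_top_p_filter(toy, top_p=0.9))
```

Lower values of either parameter restrict sampling to fewer high-probability tokens, which tends to make output more focused and less varied.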

The model details list the model type, the language (English), and the HuggingFace Transformers library used for implementation.

Like other language models, StableBeluga2 may produce inaccurate, biased, or objectionable responses in some instances.

Therefore, developers are advised to perform safety testing and tuning specific to their applications before deploying the model. For further information or to get support, developers can contact Stability AI via email.

The model card also includes citations for reference and further research.